April 23, 2021

Infrastructure as Code: A Script for Creating S3 Buckets in AWS via CloudFormation

How to get started with this AWS resource.

Amazon S3 was one of the first AWS services, and it lets users store objects through a web interface. It provides key capabilities such as high availability, scalability, security, performance and fault tolerance.

Learn how CDW can assist you with your AWS needs.

Within AWS, S3 is commonly used as the object store for many use cases, such as disaster recovery, backup and restore, Big Data analytics, data lakes, data marts, Amazon Machine Images (AMIs), snapshots of EBS volumes and web applications.

Getting Started with S3 Bucket Creation

In most cases, the first step in the AWS cloud journey is to create an S3 bucket and upload image files or code to it via the AWS management console. The problem with the web interface is that the user needs to go through a number of UI screens to configure the different properties of the bucket. This approach is error-prone when creating many buckets with similar properties at scale.

Using APIs or SDKs to create an S3 bucket can also cause problems, especially when there are dependencies between different configuration aspects of the bucket, such as bucket policies and versioning. Orchestrating bucket creation via APIs, SDKs or scripts becomes cumbersome as the number of configuration parameters grows.

Where AWS CloudFormation Fits In

AWS CloudFormation (like HashiCorp’s Terraform) provides yet another way to create S3 buckets. Both tools let you create buckets declaratively at scale and manage the underlying dependencies automatically. We just specify the bucket properties or characteristics, and CloudFormation creates the dependent resources in the required order.

CloudFormation is a good fit for provisioning S3 buckets as code. In this post, we’ll start with a simple way to create an S3 bucket and add features as we progress. We can use either the AWS management console or the AWS command-line interface (CLI) to create AWS resources from CloudFormation scripts. In this blog, I am going to provide step-by-step instructions for creating S3 buckets using the CLI.

S3 Bucket Creation Prerequisites

To follow along, you will need to install the AWS CLI on your laptop and create programmatic access credentials (an access key ID and a secret access key).

- Steps for downloading and installing the CLI

- Following security best practices, AWS also recommends creating a user with least privilege for interacting with AWS web services. For this purpose, an IAM user that is allowed to invoke CloudFormation and to create buckets, list them and upload files to S3 should be sufficient.

S3 Bucket Process

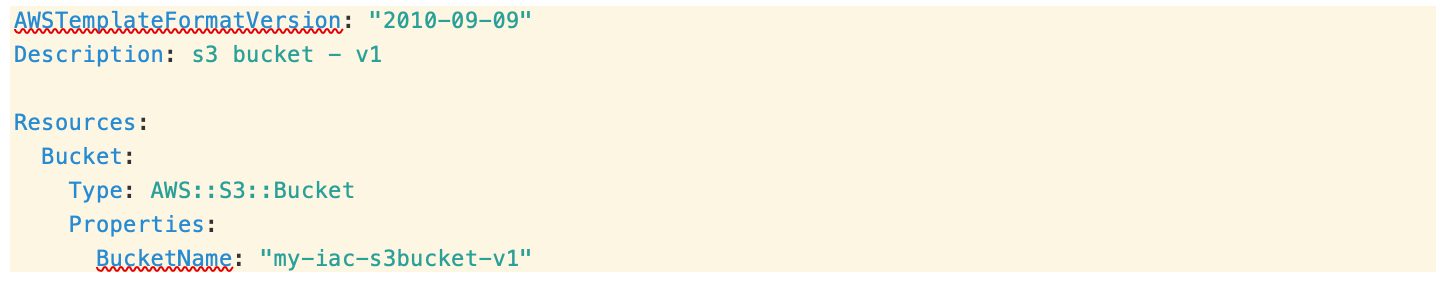

Let’s start with a basic (“hello world”) CloudFormation script for creating an S3 bucket:
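The original template is not reproduced in this text. The following is a minimal sketch consistent with the description, assuming the file is saved as s3.yaml; the logical ID `S3Bucket` and the bucket name are hypothetical placeholders, not values taken from the original script.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Basic template that creates a single S3 bucket (illustrative sketch)

Resources:
  # "S3Bucket" is a logical ID chosen for this sketch
  S3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      # Hypothetical hard-coded name; replace it with a globally unique one
      BucketName: my-iac-s3bucket-v1
```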

The above CloudFormation YAML script starts by specifying AWSTemplateFormatVersion as 2010-09-09 (currently the only valid value) and giving a short description of the script. The format version is optional but commonly included; it tells the CloudFormation service which template capabilities to apply when interpreting the script. The “Resources” section is the only section a template must have, and it declares the requested resources and their types. The only property set on the S3 bucket here is its name. Even that is optional: if the name is omitted, CloudFormation generates a unique one.

The CloudFormation script can be executed by running an AWS CLI command along the following lines (as discussed earlier, we can also upload the script via the AWS management console):

aws --profile training --region us-east-1 cloudformation create-stack --template-body file://s3.yaml --stack-name my-iac-s3bucket-v1

The command should return a response like the following, where accountId is the 12-digit AWS account identifier:

{
    "StackId": "arn:aws:cloudformation:us-east-1:<accountId>:stack/my-iac-s3bucket-v1/ac96e6a0-897d-11eb-9932-0e43f2110cc5"
}

After a few minutes, you should see a new entry in the list of CloudFormation stacks, and the corresponding S3 bucket will have been created in the AWS account. Note that the name of an S3 bucket must be globally unique.

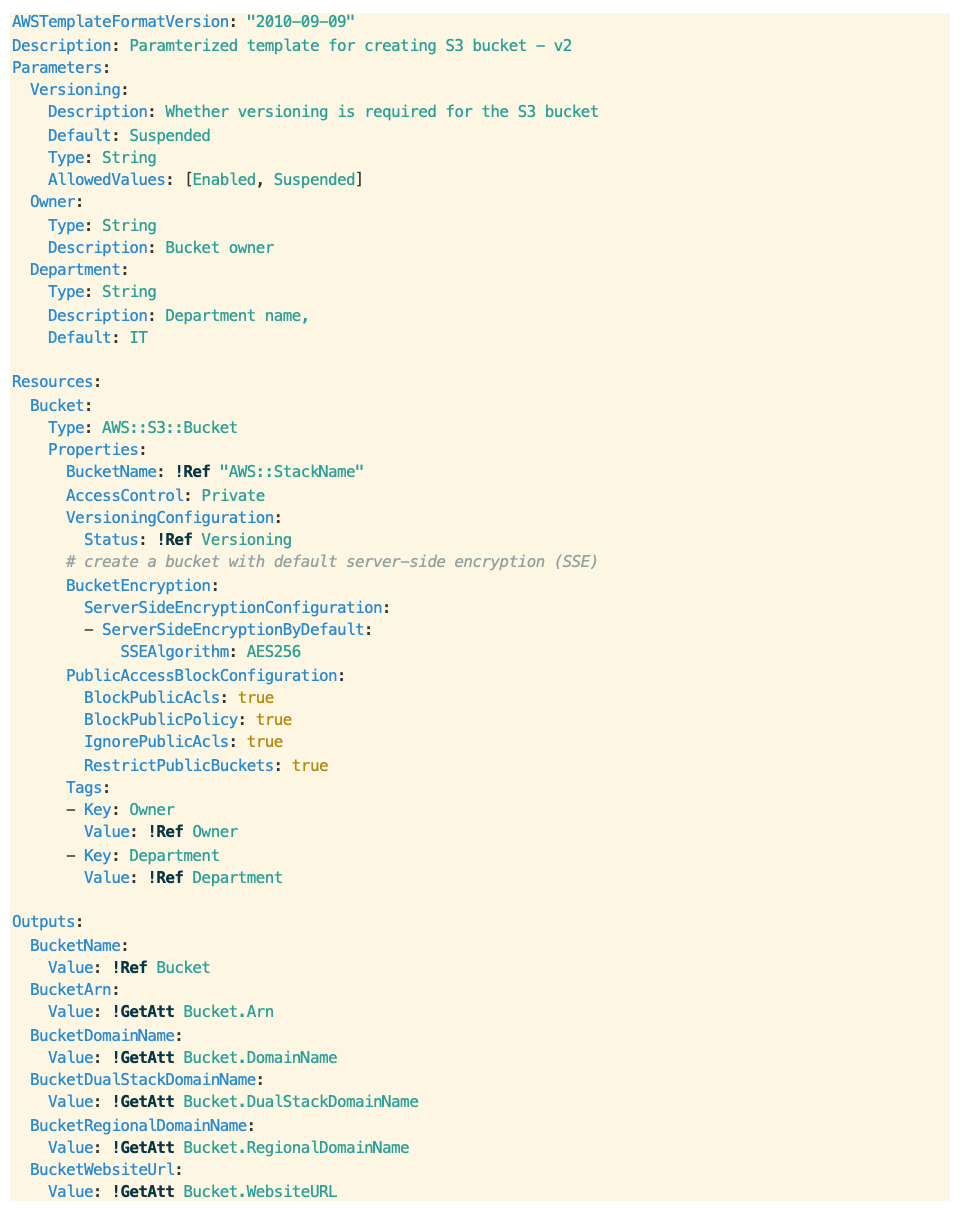

The above script is simple and a good starting point for learning CloudFormation. However, it’s barely usable in practice: the bucket name is hard-coded in the script, and nothing else is configured, including the security settings that are important for making the bucket production ready. Here’s the second version with a few enhancements.

Additional Template Options

We have added a number of additional template sections and properties in this template:

The “Parameters” section of the CloudFormation template lets you pass user inputs to the template. Here we have specified three parameters: Versioning, Owner and Department. Versioning is an optional parameter that controls whether the S3 bucket maintains object versions. Its allowed values are “Enabled” and “Suspended” (the default). With versioning enabled, you can revert to a previous version of an object, for example to recover when the latest version is accidentally deleted. Versioning is also needed for cross-region replication of S3 buckets. The Owner and Department parameters supply tag metadata that identifies the bucket owners, which is especially useful in a shared environment where the same account is used by multiple users.

We have also updated the script to use the stack name as the name of the bucket. It’s generally a good idea to have a one-to-one mapping between the CloudFormation stack name and the corresponding resource name. In this way, it’s possible to control the name of the S3 bucket via user inputs. The bucket property VersioningConfiguration in the “Resources” section configures object versioning based on the user-provided value of “Versioning.”
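The second template is not reproduced in this text either. A sketch of what it might look like, based only on the description above, follows. The logical ID `S3Bucket`, the parameter descriptions, and the choice to leave Owner and Department without defaults are assumptions made for illustration.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: S3 bucket with optional versioning and ownership tags (illustrative sketch)

Parameters:
  Versioning:
    Type: String
    Default: Suspended
    AllowedValues:
      - Enabled
      - Suspended
    Description: Whether the bucket keeps object versions
  # No defaults here (an assumption), so both values must be supplied
  Owner:
    Type: String
    Description: Person responsible for the bucket
  Department:
    Type: String
    Description: Department that owns the bucket

Resources:
  S3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      # The bucket takes its name from the stack name
      BucketName: !Ref AWS::StackName
      VersioningConfiguration:
        Status: !Ref Versioning
      Tags:
        - Key: Owner
          Value: !Ref Owner
        - Key: Department
          Value: !Ref Department
```

Because the bucket name comes from the stack name, the stack name has to satisfy S3 naming rules as well: lowercase letters, digits and hyphens, 3 to 63 characters, and globally unique.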

Security Aspects of S3 Bucket

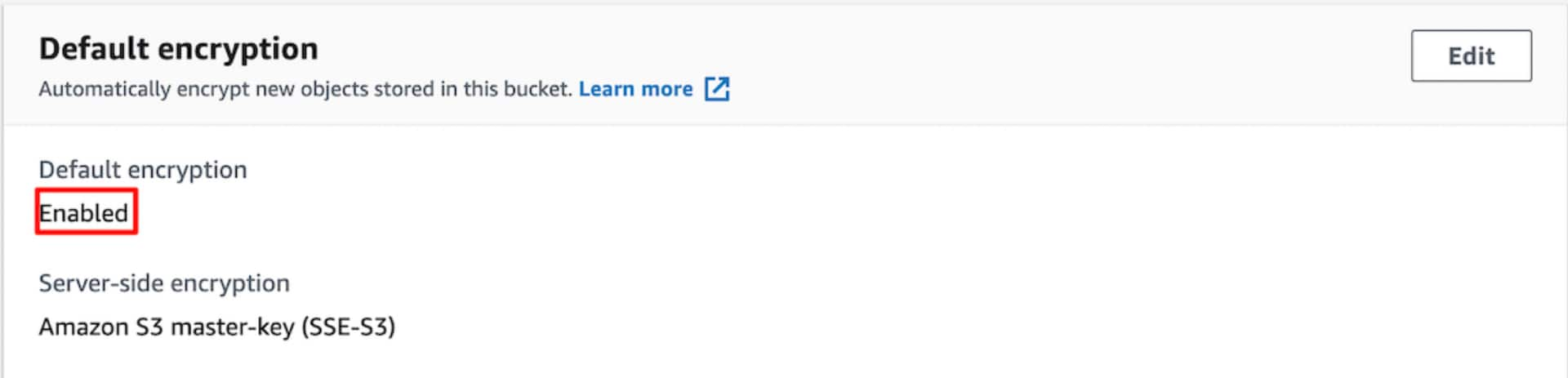

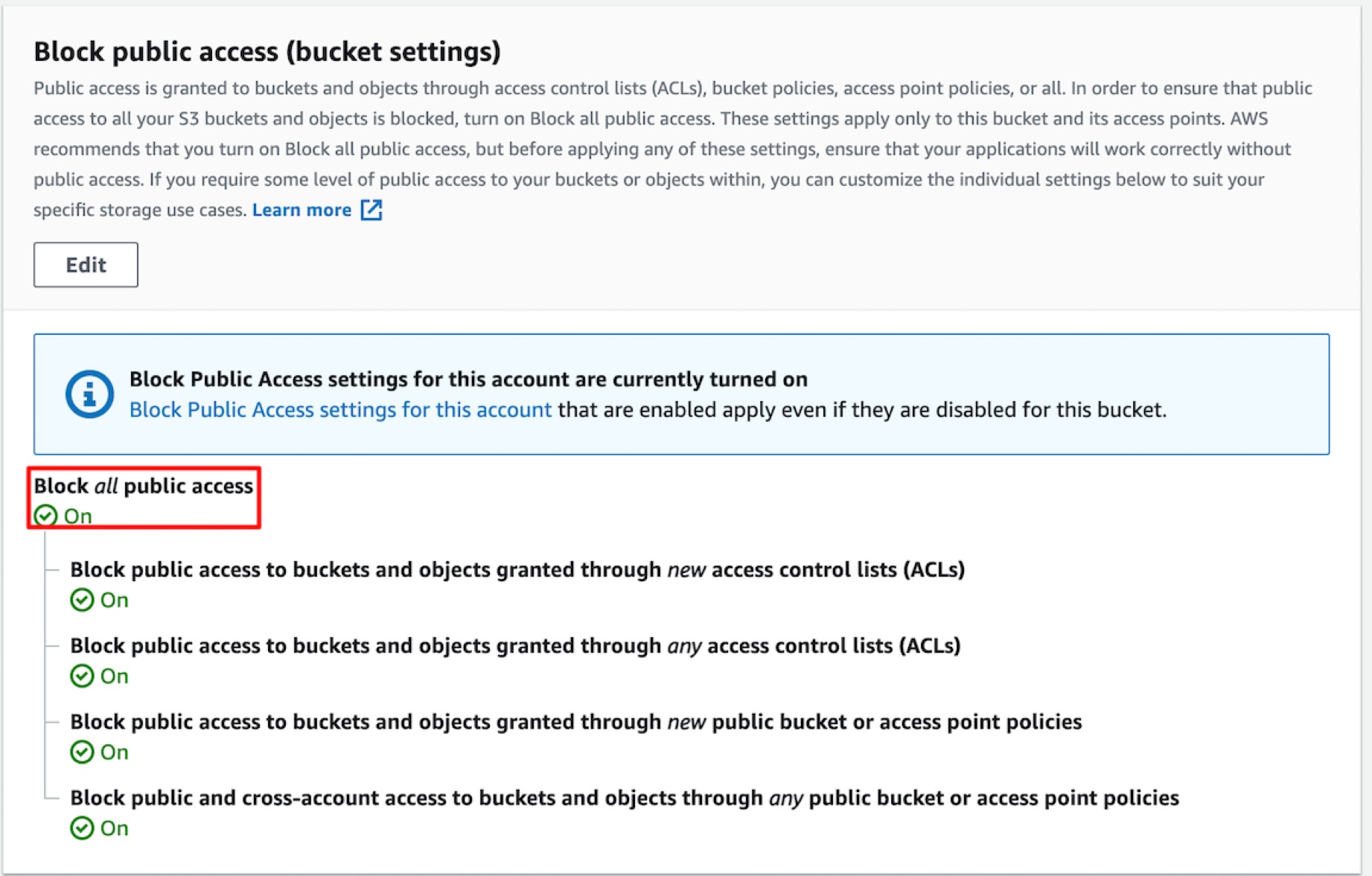

The next three settings configure various security aspects of the S3 bucket. The bucket is not publicly accessible, and objects in the bucket are automatically encrypted by the S3 service using a key managed by S3 (SSE-S3). We also explicitly block public bucket policies and public access control lists for the bucket.
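The exact properties used in the original template are shown only in the screenshots. As a hedged sketch, the two AWS::S3::Bucket properties below would produce the behavior described: default encryption with an S3-managed key and a full public access block. The logical ID `S3Bucket` is a placeholder, and the original template may have set a third property that is not reconstructed here.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: S3 bucket with default encryption and public access blocked (illustrative sketch)

Resources:
  S3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: !Ref AWS::StackName
      # Encrypt new objects by default with an S3-managed key (SSE-S3)
      BucketEncryption:
        ServerSideEncryptionConfiguration:
          - ServerSideEncryptionByDefault:
              SSEAlgorithm: AES256
      # Reject and ignore public ACLs and public bucket policies
      PublicAccessBlockConfiguration:
        BlockPublicAcls: true
        BlockPublicPolicy: true
        IgnorePublicAcls: true
        RestrictPublicBuckets: true
```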

And here is how you would invoke the script via CLI:

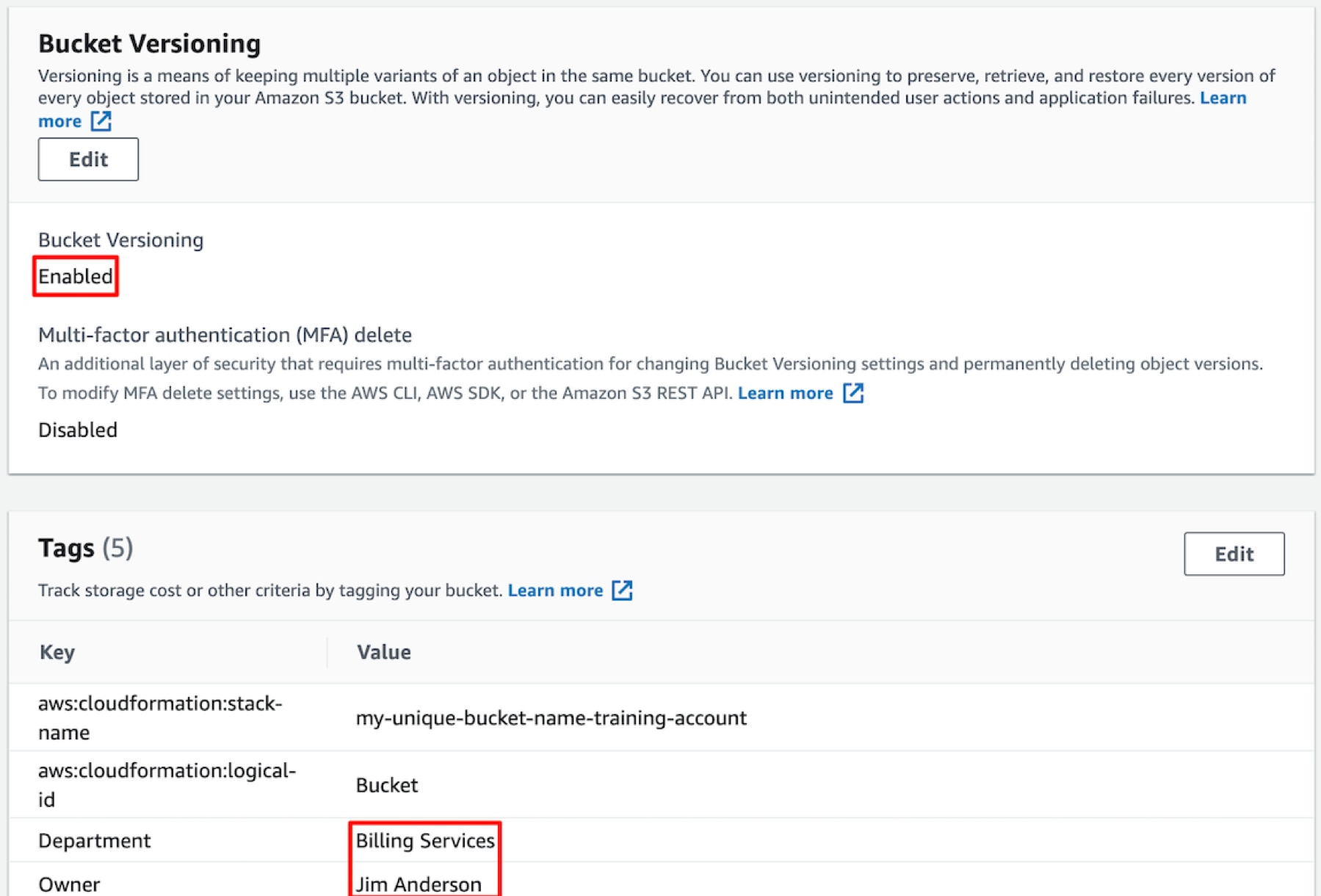

aws --profile training --region us-east-1 cloudformation create-stack --template-body file://s3-v2.yaml --parameters ParameterKey=Versioning,ParameterValue=Enabled ParameterKey=Owner,ParameterValue='Jim Anderson' ParameterKey=Department,ParameterValue='Billing Services' --stack-name my-unique-bucket-name-training-account

{
    "StackId": "arn:aws:cloudformation:us-east-1:<AccountId>:stack/my-unique-bucket-name-training-account/266b0cf0-8c34-11eb-8c7f-0a55d4967de1"
}

Once the CloudFormation stack is created, you can go to the S3 section of the console and verify that the S3 bucket has been configured properly:

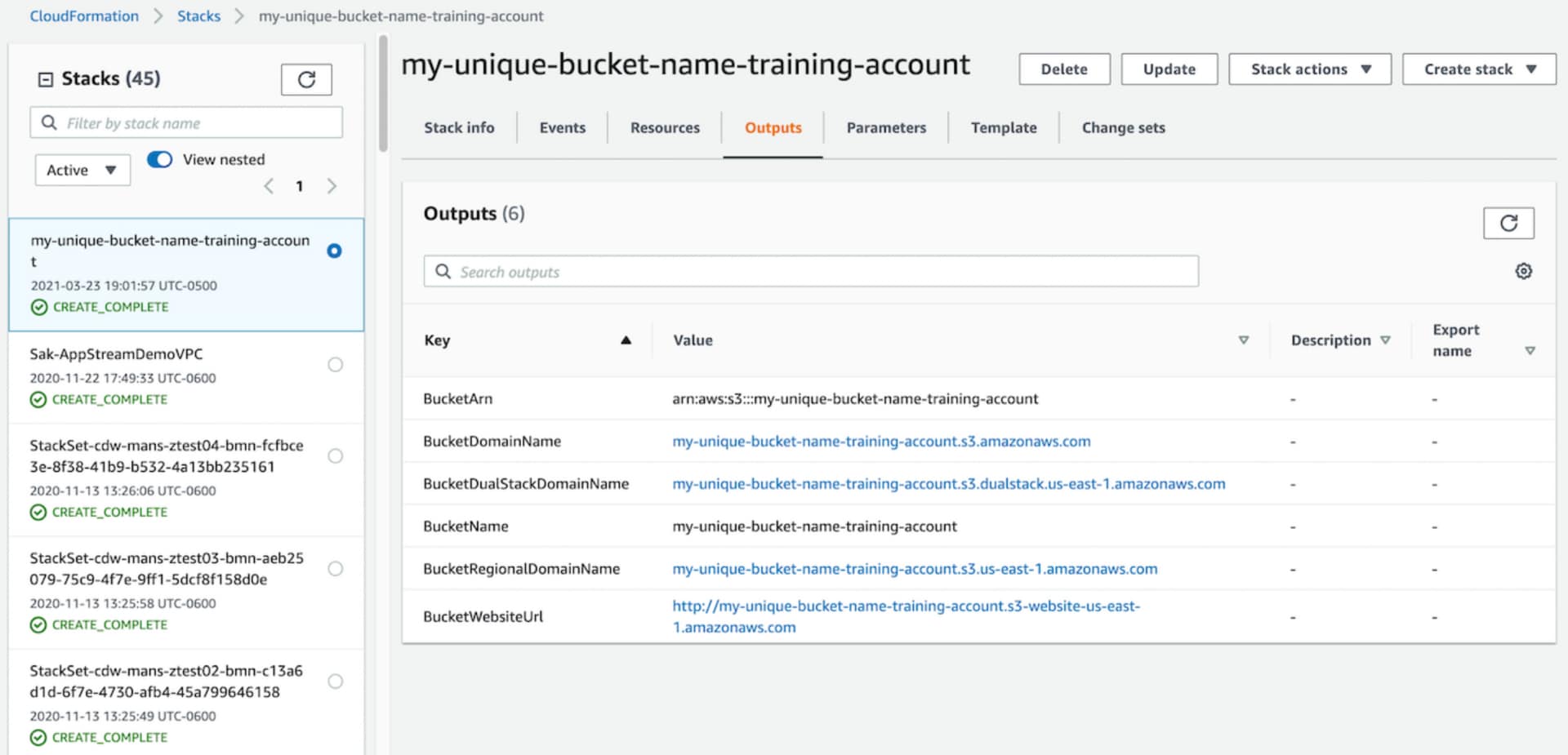

The template also has an “Outputs” section, which can be used to view various attributes of the configured bucket (via CloudFormation):
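The Outputs section itself is not reproduced in this text. A minimal sketch, assuming a bucket resource with the hypothetical logical ID `S3Bucket`, could expose the bucket name, ARN and domain name like this. The output names are illustrative choices.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: S3 bucket with stack outputs (illustrative sketch)

Resources:
  S3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: !Ref AWS::StackName

Outputs:
  BucketName:
    Description: Name of the bucket
    # Ref on an AWS::S3::Bucket returns the bucket name
    Value: !Ref S3Bucket
  BucketArn:
    Description: Amazon Resource Name of the bucket
    Value: !GetAtt S3Bucket.Arn
  BucketDomainName:
    Description: DNS name of the bucket
    Value: !GetAtt S3Bucket.DomainName
```

Once the stack is created, the outputs appear on the stack's Outputs tab in the console, and they can also be read from the CLI with `aws cloudformation describe-stacks --stack-name <stack-name>`.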

To summarize, CloudFormation provides the ability to create infrastructure in AWS as code, using a declarative syntax.

CDW’s AWS Professional and Managed Services

CDW has a mature AWS practice in both professional and managed services. We have highly qualified teams consisting of solutions architects, delivery engineers, technical account managers (TAMs), and operations and DevOps engineers. All of our technical staff hold AWS certifications at the associate, specialty and professional levels in their practice areas.

Within our managed and professional AWS practice areas, we utilize a number of these scripts in order to ensure that resources in our customers’ AWS accounts are created with high consistency using pre-tested scripts. This allows our engineers to make the AWS environment ready for the customer in a short time, allowing them to focus on solving their business challenges. CDW professional and managed services can assist your company by providing thought leadership to help you in your company’s cloud journey.

Reach out to your account manager or AWS sales team to discover the value CDW can bring to your cloud journey.

Yagna Pant

CDW Expert