October 19, 2021

Navigating a Manual VM Import to AWS

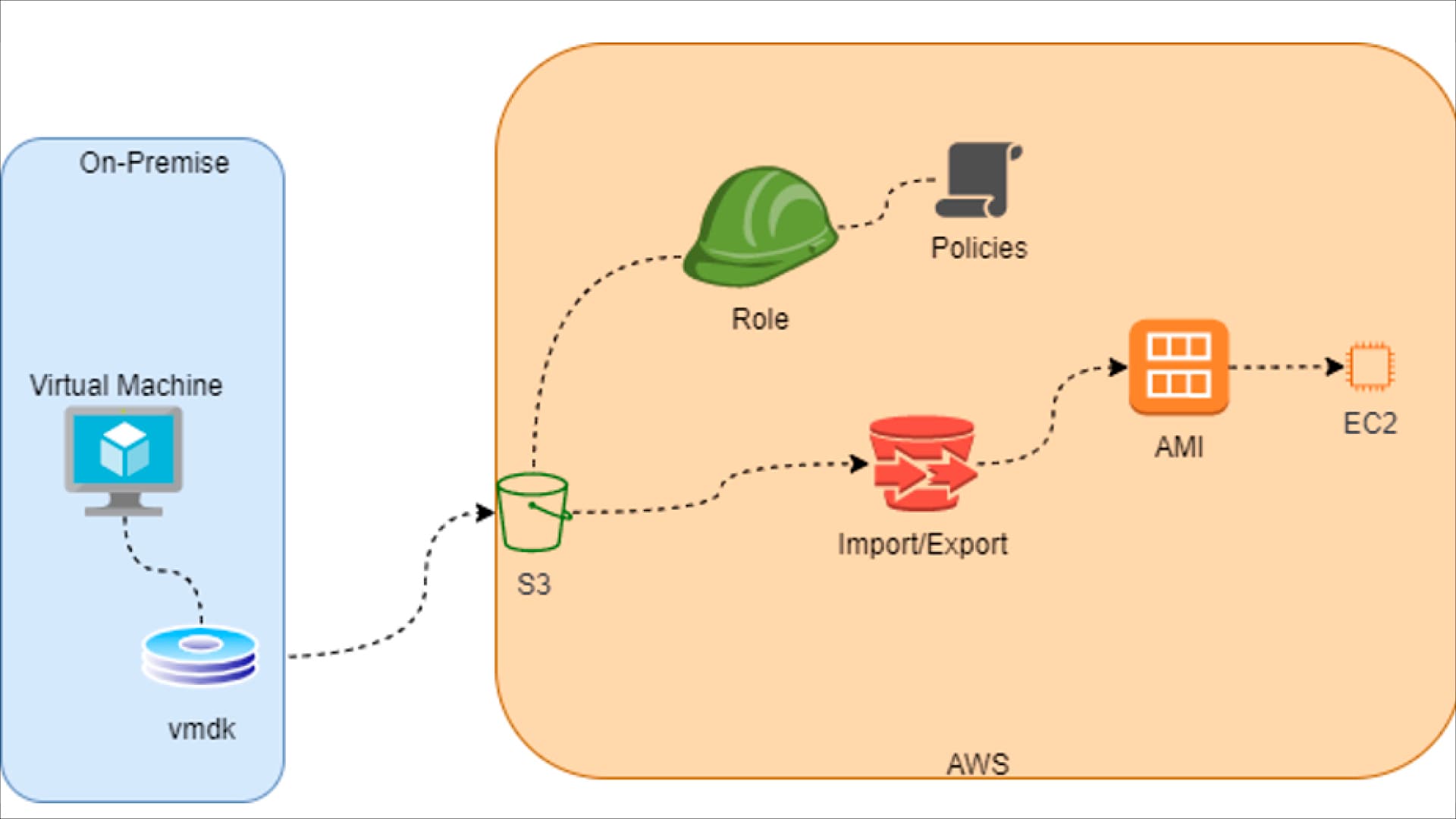

Guidance on moving on-premises virtual machines to AWS as Amazon Machine Images (AMI).

Arun Daniel

I have frequently been asked how to manually import on-premises virtual machines into AWS without using other services such as CloudEndure or AWS Migration Services. Some organizations want a quick and dirty way to get from the source to the destination without spinning up extra appliances or going through Change Control, or they simply want to reach the end goal faster than the extra layers of those services allow.


VM Import/Export

VM Import/Export enables you to import virtual machine images from your existing virtualization environment (VMware, Hyper-V, etc.) to Amazon EC2. This lets you migrate applications and workloads to Amazon EC2, copy your VM image to Amazon EC2 or create a repository of VM images for backup and disaster recovery. Although other services can do the heavy lifting, this article sheds light on what it takes to manually convert an on-premises virtual machine to an Amazon Machine Image (AMI).

Pre-Requisites for VMware Image:

- Remove VMware tools if installed.

- Disable AV or IDS applications.

- Disconnect CD-ROM drives.

- Enable DHCP.

- Enable RDP and modify OS firewall rules to allow RDP.

- Install .NET Framework 4.5 or later.

AWS CLI

Silent Install:

To silently install AWS CLI on a computer with internet access:

- Open PowerShell.

- Run: msiexec.exe /i https://awscli.amazonaws.com/AWSCLIV2.msi /q

Confirm:

To confirm AWS CLI has been successfully installed:

- Open PowerShell.

- Run: aws --version

- Output should show the version that was installed.
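If you want to script this check instead of reading the output by eye, the following is a minimal Python sketch. It assumes the version line starts with the usual `aws-cli/<major>.<minor>.<patch>` token; the helper names `parse_cli_version` and `installed_cli_version` are made up for this illustration.

```python
import re
import shutil
import subprocess


def parse_cli_version(output):
    """Return (major, minor, patch) from text like 'aws-cli/2.15.30 Python/3.11.8 ...'."""
    match = re.search(r"aws-cli/(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def installed_cli_version():
    """Run 'aws --version'; return the parsed version, or None if the CLI is not on PATH."""
    exe = shutil.which("aws")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=False)
    # Check both streams in case the version line is not written to stdout.
    return parse_cli_version(result.stdout + result.stderr)
```

Calling `installed_cli_version()` in a new session returns a tuple you can compare against the version you expected to install, or `None` if the install did not put `aws` on the PATH.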

AWS CLI Configuration

AWS Profile:

To create your AWS profile with your access key ID and secret access key:

- Open PowerShell.

- Run: aws configure

- Input your access key ID and press enter.

- Input your secret access key and press enter.

- Input your default region, “us-east-1” as an example, and press enter.

- Input your default output format, “json” as an example, and press enter.

To confirm your AWS profile was saved:

- Open PowerShell.

- Run: cat c:\users\<your login name>\.aws\config and press enter.

a. This will show general properties for your default profile.

- Run: cat c:\users\<your login name>\.aws\credentials and press enter.

a. This will show your credentials, which include your access key ID and secret access key.
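Because the credentials file holds secrets, you may prefer to confirm the profile without printing it to the screen. The sketch below reads only the non-secret config file with Python's standard `configparser`. The function names are hypothetical, and it assumes the file uses the standard layout where the default profile lives under `[default]` and other profiles under `[profile <name>]`.

```python
import configparser
from pathlib import Path


def default_config_path():
    """Location the AWS CLI uses by default: <home>/.aws/config."""
    return Path.home() / ".aws" / "config"


def read_profile_settings(config_path, profile="default"):
    """Return the region and output format saved for a profile, or None if it is absent."""
    parser = configparser.ConfigParser()
    parser.read(config_path)
    # In the config file, named profiles are stored as "profile <name>".
    section = "default" if profile == "default" else f"profile {profile}"
    if not parser.has_section(section):
        return None
    return {
        "region": parser.get(section, "region", fallback=None),
        "output": parser.get(section, "output", fallback=None),
    }
```

For example, `read_profile_settings(default_config_path())` returns the region and output format you entered during `aws configure`.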

S3 Bucket

To store the vmdk (or vhd) file for your virtual machine, you will need to create an S3 bucket in AWS. Each bucket name must be unique across all of AWS (not just your account) and may contain only lowercase letters, numbers, hyphens and periods.

To Create a Bucket

- Open PowerShell.

- Run: aws s3 mb s3://nameofmybucket/import

a. nameofmybucket is an example name; please substitute something unique to you.

b. /import is a 'folder' in the 'nameofmybucket' bucket (S3 doesn't really do folders, only key prefixes, but that is another discussion); this will be the location of the virtual machine file. The prefix itself shows up once a file is uploaded under it.
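A rejected bucket name is a common first stumbling block, so here is a small Python sketch that checks a candidate name before you run the command. It covers only the main naming rules (3 to 63 characters; lowercase letters, digits, hyphens and periods; starts and ends with a letter or digit; no consecutive periods; not shaped like an IP address) and is not a complete implementation of the S3 rules. The function name is an assumption made for this example.

```python
import re

# Main S3 bucket naming rules only; some reserved prefixes and suffixes are not checked.
_NAME_PATTERN = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_PATTERN = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name):
    """Return True if the name passes the basic S3 bucket naming checks."""
    if _NAME_PATTERN.fullmatch(name) is None:
        return False  # wrong length, uppercase, or bad first/last character
    if ".." in name:
        return False  # consecutive periods are not allowed
    if _IP_PATTERN.fullmatch(name) is not None:
        return False  # must not look like an IP address
    return True
```

Passing the check does not mean the name is free; global uniqueness is only known once the create call succeeds.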

Copy to Bucket:

- Open PowerShell.

- Browse to the location of your vmdk file.

- Run: aws s3 cp 'locationOfvirtualMachineFolder\2019.vmdk' s3://nameofmybucket/import/

- Wait for the file to finish uploading.
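The object key produced by this upload is the same value that later goes into the S3Key field of containers.json, so it helps to derive both from one place. The following Python sketch does that. The helper names and the example path `C:\VMs\2019.vmdk` are illustrative assumptions, and the second function only builds the argument list without running it.

```python
from pathlib import PureWindowsPath


def s3_destination(local_path, bucket, prefix="import"):
    """Return (key, uri) for uploading a local file under a bucket prefix."""
    # PureWindowsPath accepts both backslash and forward-slash separators.
    file_name = PureWindowsPath(local_path).name
    key = f"{prefix.strip('/')}/{file_name}"
    return key, f"s3://{bucket}/{key}"


def build_copy_command(local_path, bucket, prefix="import"):
    """Return the 'aws s3 cp' argument list without executing it."""
    _, uri = s3_destination(local_path, bucket, prefix)
    return ["aws", "s3", "cp", local_path, uri]
```

For instance, `s3_destination(r"C:\VMs\2019.vmdk", "nameofmybucket")` yields the key `import/2019.vmdk`, and the list from `build_copy_command` can be handed to `subprocess.run` when you are ready to upload.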

Import Roles and Policies

In order for the import service to work properly, certain permissions must be created for the service. Three files will be created for this task:

- containers.json – this file contains the location of your vmdk file in AWS S3. You can use VMDK, VHD or OVA files.

- trust-policy.json – this file allows the VM Import service (vmie.amazonaws.com) to assume the role in your account.

- role-policy.json – this file grants the permissions to access the S3 bucket holding your vmdk file, and will be attached to the role.

Code

1. Create a file called containers.json and copy and paste the contents below into that file, replacing the S3Bucket and S3Key values with your own:

        [{
            "Description": "First CLI task",
            "Format": "vmdk",
            "UserBucket": {
                "S3Bucket": "name of your bucket",
                "S3Key": "import/name of the vmdk file"
            }
        }]

2. Create a file called trust-policy.json and copy and paste the contents below into that file:

        {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Effect": "Allow",
                    "Principal": { "Service": "vmie.amazonaws.com" },
                    "Action": "sts:AssumeRole",
                    "Condition": {
                        "StringEquals": {
                            "sts:Externalid": "vmimport"
                        }
                    }
                }
            ]
        }

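3. Create a file called role-policy.json and copy and paste the contents below into that file, replacing nameofmybucket with the name of your bucket. The first two statements cover the S3 bucket (the second adds the write permissions used when exporting), and the third lets the service work with the snapshot and register the AMI:

        {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Effect": "Allow",
                    "Action": [
                        "s3:GetBucketLocation",
                        "s3:GetObject",
                        "s3:ListBucket"
                    ],
                    "Resource": [
                        "arn:aws:s3:::nameofmybucket",
                        "arn:aws:s3:::nameofmybucket/*"
                    ]
                },
                {
                    "Effect": "Allow",
                    "Action": [
                        "s3:GetBucketLocation",
                        "s3:GetObject",
                        "s3:ListBucket",
                        "s3:PutObject",
                        "s3:GetBucketAcl"
                    ],
                    "Resource": [
                        "arn:aws:s3:::nameofmybucket",
                        "arn:aws:s3:::nameofmybucket/*"
                    ]
                },
                {
                    "Effect": "Allow",
                    "Action": [
                        "ec2:ModifySnapshotAttribute",
                        "ec2:CopySnapshot",
                        "ec2:RegisterImage",
                        "ec2:Describe*"
                    ],
                    "Resource": "*"
                }
            ]
        }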
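Hand-edited JSON is easy to break with a missing bracket or a statement that lost its Resource entry. As a rough safeguard, the Python sketch below builds the containers.json content from variables and checks a permissions policy (such as role-policy.json) for the keys each statement normally needs. The function names are hypothetical. The check is deliberately simple: it does not understand NotAction or NotResource, and it is not meant for trust-policy.json, which uses Principal instead of Resource.

```python
import json

REQUIRED_KEYS = ("Effect", "Action", "Resource")


def build_disk_containers(bucket, key, disk_format="vmdk", description="First CLI task"):
    """Return the structure that containers.json holds for a single disk image."""
    return [{
        "Description": description,
        "Format": disk_format,
        "UserBucket": {"S3Bucket": bucket, "S3Key": key},
    }]


def write_json(path, data):
    """Write data as indented JSON so the file stays readable."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(data, handle, indent=2)


def find_policy_problems(policy_text):
    """Return a list of human-readable problems found in a permissions policy."""
    try:
        policy = json.loads(policy_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(policy, dict):
        return ["top level of the policy must be a JSON object"]
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]  # a single statement may appear without a list
    problems = []
    for index, statement in enumerate(statements):
        for required in REQUIRED_KEYS:
            if required not in statement:
                problems.append(f"statement {index}: missing {required}")
    return problems
```

A typical use is `write_json("containers.json", build_disk_containers("nameofmybucket", "import/2019.vmdk"))`, followed by running `find_policy_problems` on the text of role-policy.json and fixing anything it reports before creating the role.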

Roles

Create the role and attach its policy using the files you created above:

- Open PowerShell.

- Browse to the location of the files above.

- Run: aws iam create-role --role-name vmimport --assume-role-policy-document file://trust-policy.json

- Run: aws iam put-role-policy --role-name vmimport --policy-name vmimport --policy-document file://role-policy.json

Import VMDK to AMI

- Open PowerShell.

- Run: aws ec2 import-image --description "Windows 2008 VHD" --license-type BYOL --disk-containers file://containers.json

- The above will return a task ID (ImportTaskId), which you can use to view the status/progress:

a. In PowerShell, run the following, replacing import-ami-1234567890abcdef0 with the task ID returned above: aws ec2 describe-import-image-tasks --import-task-ids import-ami-1234567890abcdef0
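Imports can take a while, so rather than re-running the status command by hand you could poll it. The Python sketch below wraps the same describe-import-image-tasks call. The function names and the 60-second interval are arbitrary choices for this example, and it assumes a task reports the status "active" while it is still being processed; adjust that check if your output shows something different.

```python
import json
import subprocess
import time


def summarize_task(cli_output):
    """Pick the status fields out of describe-import-image-tasks JSON output."""
    tasks = json.loads(cli_output).get("ImportImageTasks", [])
    if not tasks:
        return None
    task = tasks[0]
    return {
        "status": task.get("Status"),
        "progress": task.get("Progress"),
        "message": task.get("StatusMessage"),
        "image_id": task.get("ImageId"),
    }


def wait_for_import(task_id, poll_seconds=60):
    """Poll the task until it is no longer active, printing each summary."""
    while True:
        result = subprocess.run(
            ["aws", "ec2", "describe-import-image-tasks",
             "--import-task-ids", task_id, "--output", "json"],
            capture_output=True, text=True, check=True,
        )
        summary = summarize_task(result.stdout)
        print(summary)
        # Assumption: "active" means the import is still in progress.
        if summary is None or summary["status"] != "active":
            return summary
        time.sleep(poll_seconds)
```

When the loop returns, the `image_id` entry, if present, is the AMI to look for in the EC2 console.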

Launch AMI

Once the conversion is complete, you can:

- Log into the AWS Console.

- Near the top, search for EC2 and click on the link shown to head to the EC2 administration console.

- Click on Launch Instance.

- Select My AMIs from the left pane.

- Find your AMI and click on Select.

- Continue as with any EC2 provisioning, picking the specs, subnets, security groups, etc.

Arun Daniel is a Sr. Consulting Engineer for Data Center and Cloud Services at CDW with more than 20 years of experience designing, deploying and managing all aspects of data center and cloud services. For the past 10 years, his primary focus has been migrations from on-premises data centers to Amazon Web Services. Arun holds numerous AWS certifications, as well as HashiCorp, Microsoft Azure, VMware and Cisco certifications.